About Me

I am a graduate student at Cornell Tech and a research assistant at the Computer Systems Laboratory, where I design efficient algorithms for large language models and build scalable ML systems. I received my B.S. in Computer Science (CS) and Electrical and Computer Engineering (ECE) from Cornell University with summa cum laude.

I'm graduating in December 2025 and am actively seeking Software Engineer, Machine Learning Engineer, and Research Engineer opportunities. I'm interested in exploring areas outside my past experiences to broaden my expertise and contribute to diverse technical challenges. My updated resume can be found here.

My recent work explores test-time optimization for diffusion language models, focusing on caching strategies and diffusion scheduling to accelerate inference. In the past, I have worked on recommendation systems (retrieval and ranking) and DNN model quantization.

I am broadly interested in ML systems optimization, distributed computing, and efficient inference for large-scale AI, with an emphasis on connecting research to practical and scalable solutions. I have been fortunate to collaborate with Prof. Zhiru Zhang, Prof. Udit Gupta, Prof. Mohamed Abdelfattah, and Prof. Jae-sun Seo throughout my academic journey.

Research

FlashDLM: Test-Time Optimization for Diffusion LLM Inference

Novel caching strategies and guided diffusion for 34× inference speedup

Thanks to my collaborators Jian Meng and Yash Akhauri for their valuable contributions to this work.

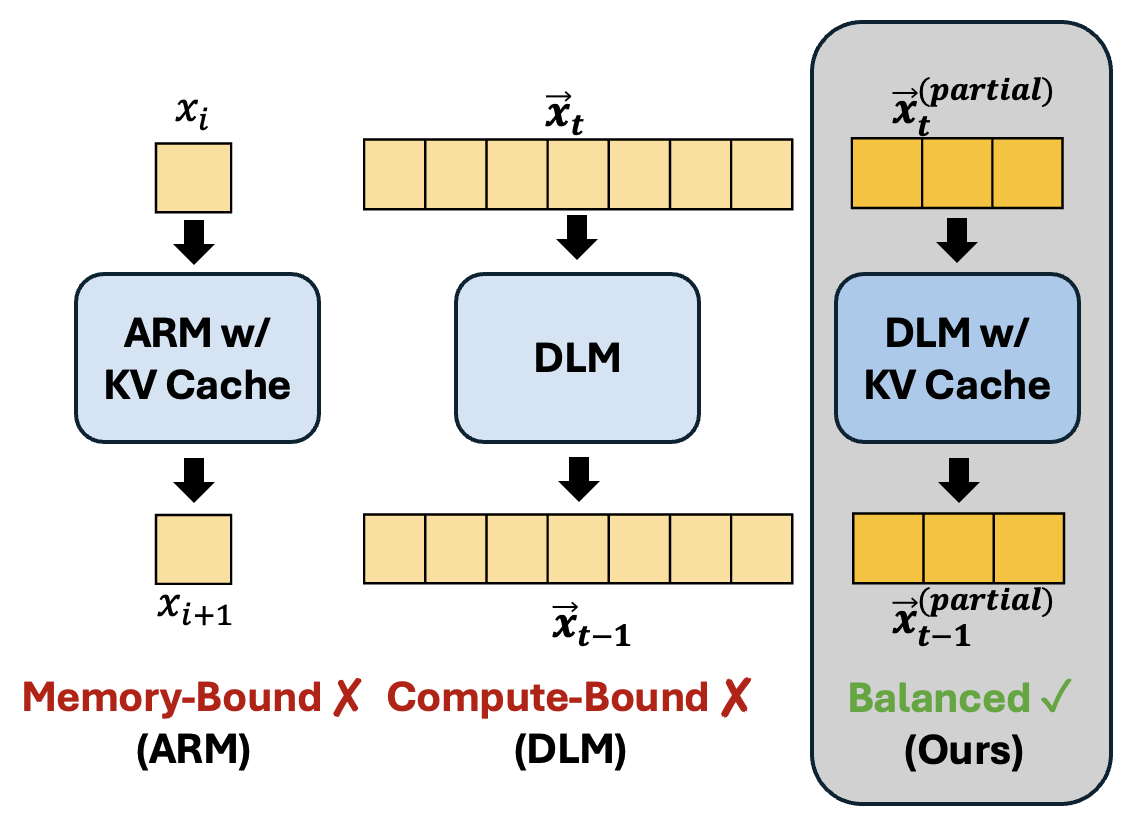

Diffusion language models enable parallel token generation and bidirectional context but suffer from slow inference due to iterative denoising, making them impractical for long context reasoning compared to autoregressive models.

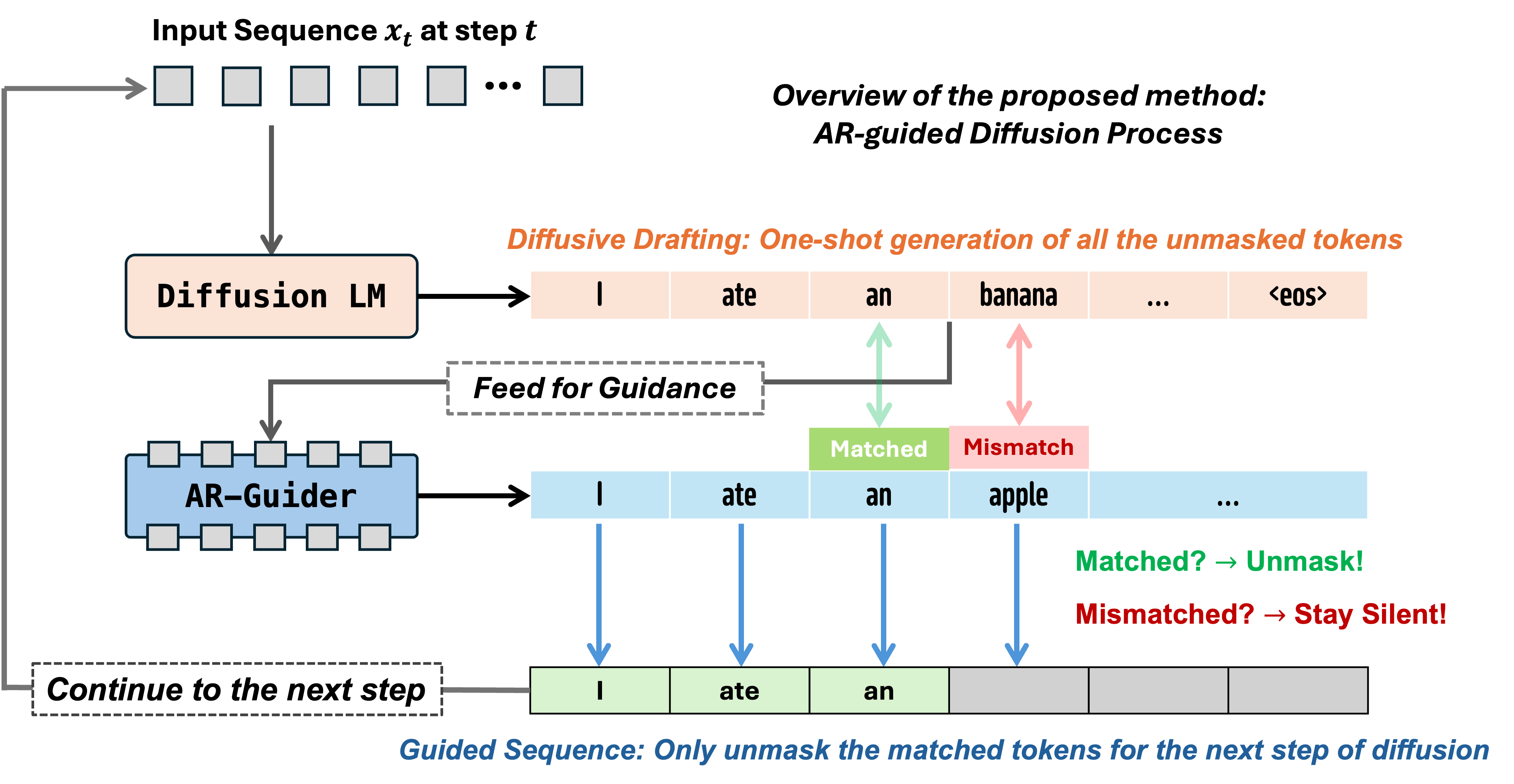

This work introduces FreeCache and Guided Diffusion, two training-free techniques for accelerating diffusion inference. FreeCache reuses stable key-value projections across steps, and Guided Diffusion employs a lightweight autoregressive model to guide token unmasking, reducing the number of iterations while preserving coherence.

Together these methods achieve up to 34× end-to-end speedup with negligible accuracy loss, making diffusion models as efficient as autoregressive baselines and enabling their deployment in real-world applications.

FreeCache

GuidedDiffusion

ReCoOpt: Co-Design Framework for Efficient Recommendation System

Hardware-software co-design for end-to-end recommendation optimization

Thanks to my collaborator Mark Zhao for his valuable contributions to this work.

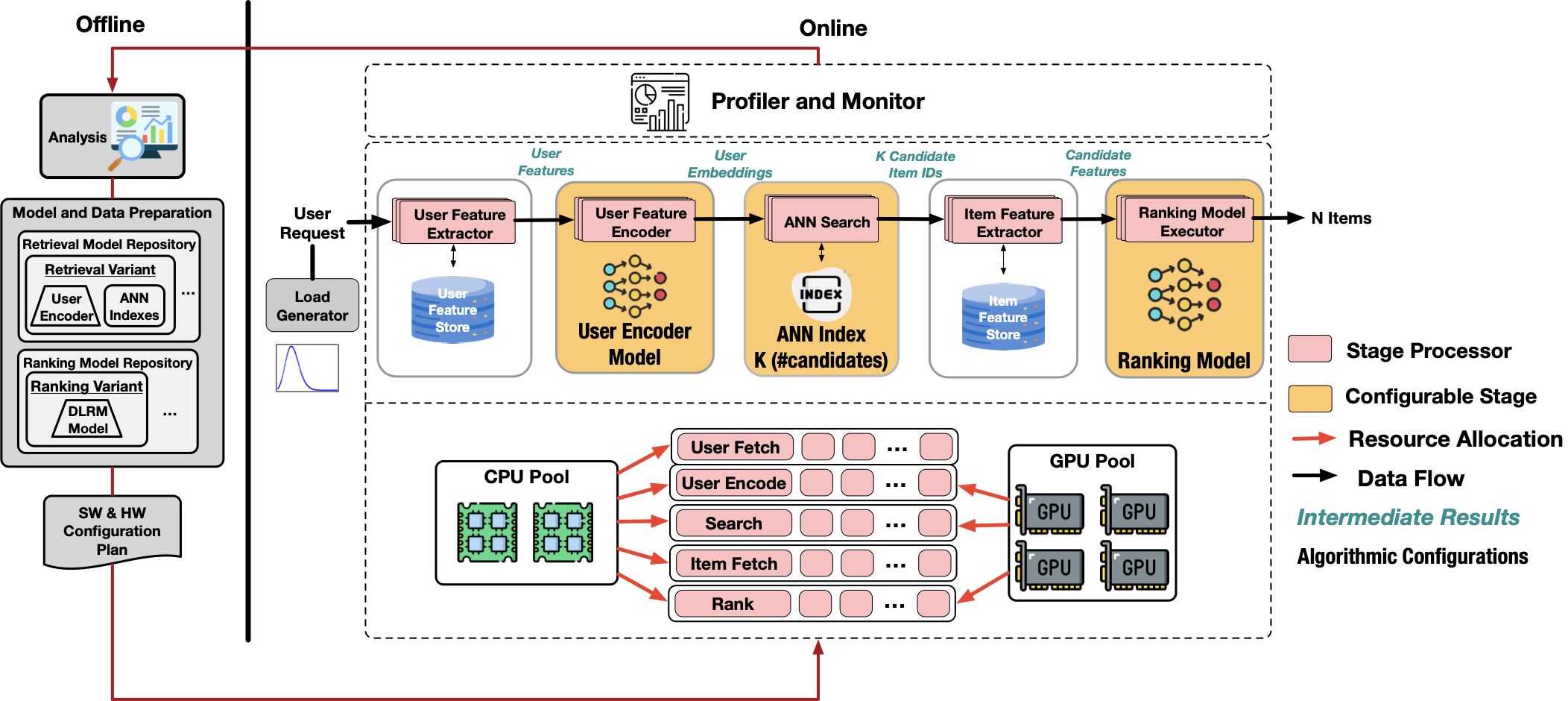

Modern recommendation systems execute multi-stage pipelines at massive scale, but most optimization efforts target only the deep neural network ranking stage, leaving major bottlenecks in feature fetching, approximate nearest neighbor search, and orchestration.

To address this gap, ReCoOpt is introduced as a modular framework that enables systematic hardware and software co-design of end-to-end recommendation inference. The framework profiles and tunes retrieval, feature fetching, and ranking across heterogeneous platforms to explore balanced pipeline configurations.

Using MovieLens datasets, ReCoOpt demonstrates that holistic co-design can improve hit rate by 0.05 under a fixed latency budget, double throughput, or reduce latency by 40%, showing that balanced hardware and software optimization is essential for scalable recommendation engines.

OverQ: Overwrite Quantization for Outlier-Aware CNN Acceleration

Overwrite quantization technique for handling activation outliers in CNNs

Thanks to my mentor Jordan Dotzel for his guidance and support on this work.

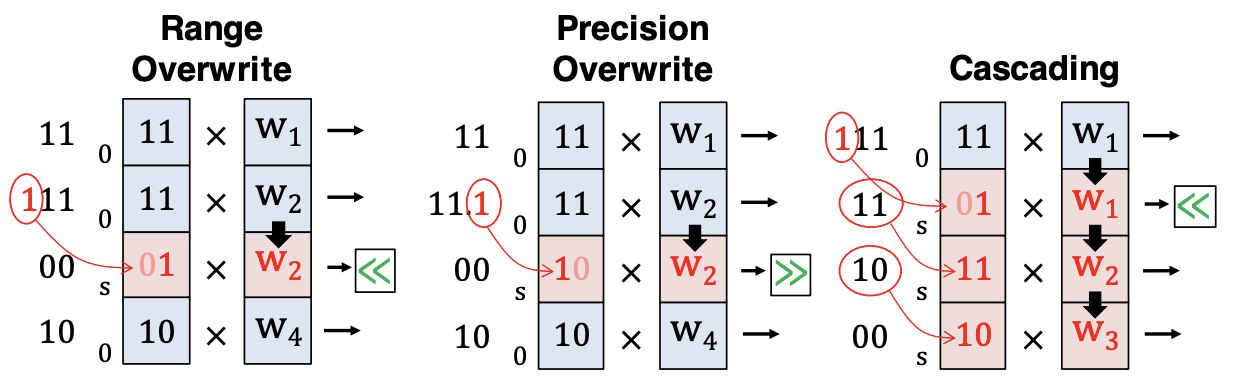

Low precision quantization reduces the cost of deep neural networks but suffers from rare outliers in activations that degrade accuracy. Existing fixes require retraining or expensive outlier hardware.

OverQ introduces overwrite quantization, which lets outliers reuse nearby zeros to gain extra range or precision. A simple cascading mechanism expands coverage, and the design fits efficiently into systolic array accelerators.

With modest overhead, OverQ handles over 90% of outliers, improving ImageNet accuracy by up to 5% at 4 bits while adding only about 10% area per processing element.

Teaching

Course Development: MLSys Teaching Frameworks (Cornell ECE 5545)

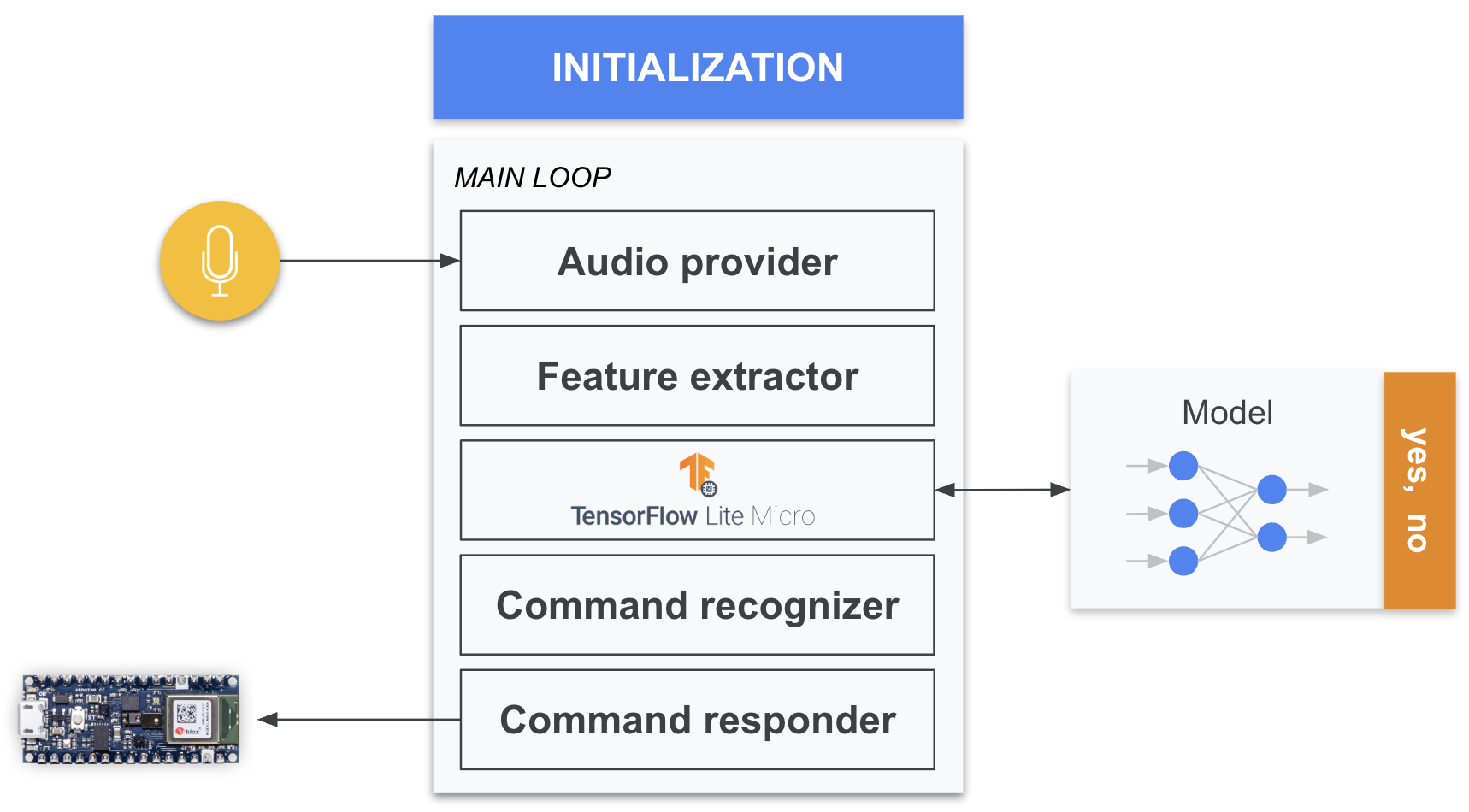

Led development of user-friendly PyTorch frameworks for speech recognition model training, fine-tuning, quantization, and deployment. Wrote TVM tutorials and directed TinyML Keyword Spotting deployment on Arduino Nano 33 BLE.

Teaching Assistant

Served as Teaching Assistant for the following courses at Cornell:

- CS 1110: Introduction to Computing; CS 4/5780: Introduction to Machine Learning; CS 4/5410: Operating Systems; ECE 5755: Modern Computer Systems and Architecture

Technical Projects

Custom Compiler for x86-64

Led team of 3 to build complete compiler in Java targeting x86-64 assembly.

Implemented 12.5K lines of code with lexical analysis, semantic analysis, and optimization passes.

Sokoban Game Engine in OCaml

Designed and implemented a GUI-based Sokoban engine in OCaml using the Graphics Module, supporting interactive rendering and state management.

Extended with multiplayer synchronization, checkpointing, and rule-based constraints.

RISC-V Multicore Processor in Verilog

Implemented quad-core RISC-V processor in Verilog with pipelined execution, bypassing, and variable-latency ALU with iterative multiplier.

Designed memory subsystem and cache hierarchy (two-way set-associative).